This recap and Q+A complement our webinar, Mastering Moku: Introducing the Moku Neural Network, on November 6, 2024. If you weren’t able to attend live, you can watch the webinar on demand.

In addition to a webinar summary, we’re providing in-depth answers to select audience questions below.

Webinar recap

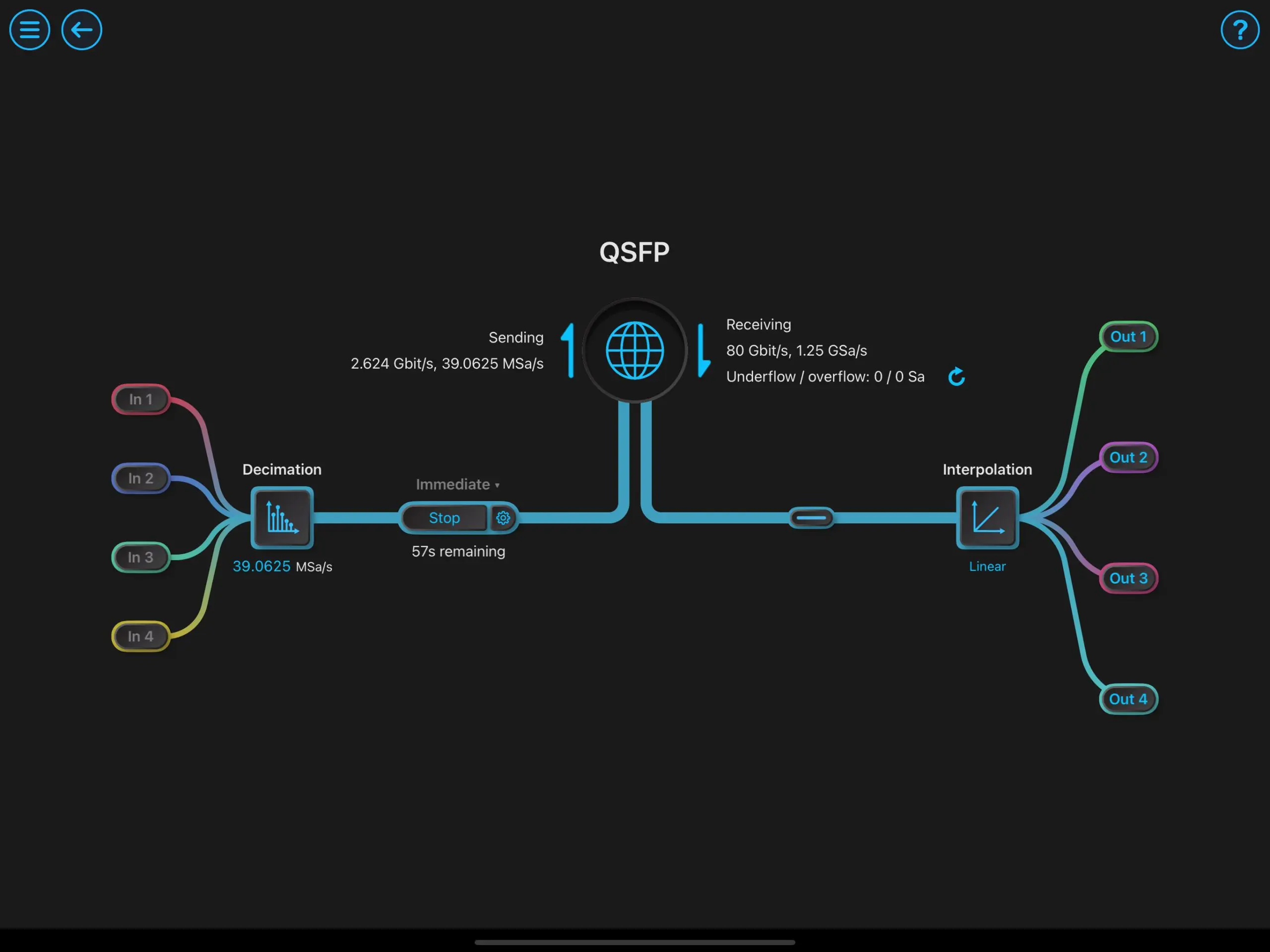

During this presentation, we introduced the new Neural Network instrument, available in Multi-Instrument Mode on Moku:Pro devices. We also covered the concept of FPGA-based neural networks, including how they enable real-time, deterministic network operation.

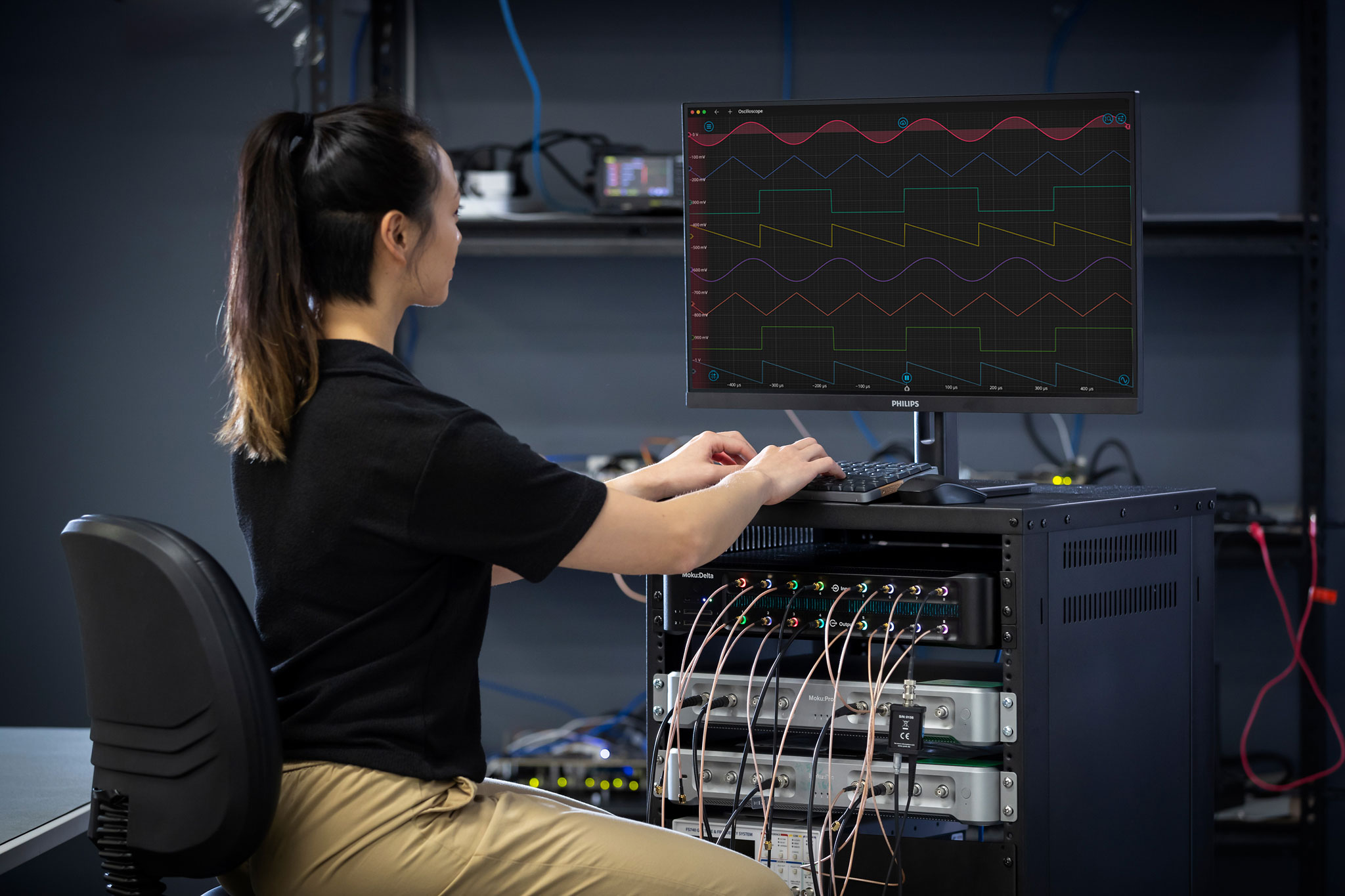

In a live demonstration, we then built, trained, and deployed two different neural network models: a weighted sum and an autoencoder, used here as a denoiser. With these two models running in the Moku Neural Network, we tested multiple waveforms to demonstrate the instrument's ability to perform real-time inference.
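To make the weighted sum model concrete, here is a minimal sketch in plain Python of a single linear neuron trained to reproduce a weighted sum of two inputs. It is not the code from the webinar or the GitHub example. The target weights (0.3 and 0.7), the learning rate, and the function name `train_weighted_sum` are assumptions chosen for illustration.

```python
import random

def train_weighted_sum(samples, targets, lr=0.05, epochs=200):
    """Fit y = w0*x0 + w1*x1 + b with plain stochastic gradient descent."""
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(epochs):
        for (x0, x1), y in zip(samples, targets):
            err = (w[0] * x0 + w[1] * x1 + b) - y  # prediction error
            w[0] -= lr * err * x0                  # gradient step per weight
            w[1] -= lr * err * x1
            b -= lr * err                          # gradient step for the bias
    return w, b

# Hypothetical training set: two inputs in [-1, 1], target is 0.3*x0 + 0.7*x1.
random.seed(0)
samples = [(random.uniform(-1, 1), random.uniform(-1, 1)) for _ in range(200)]
targets = [0.3 * x0 + 0.7 * x1 for x0, x1 in samples]

w, b = train_weighted_sum(samples, targets)
print(w, b)  # weights close to 0.3 and 0.7, bias close to 0
```

Because the target is an exact linear function of the inputs, one neuron with a linear activation is enough to learn it.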

Audience questions

Can you provide links to the GitHub examples used in the webinar?

Find the weighted sum example here, and the autoencoder example here. For a detailed walk-through of the weighted sum example, follow along in this blog.

Should the user design the model architecture in Moku:Pro prior to uploading the .linn file?

No. The model architecture is defined in a Python script before the .linn file is uploaded to Moku:Pro. In that script, you define and train your network, then generate a .linn file containing the required weights, biases, and model definition. Once the .linn file is uploaded to the Moku:Pro Neural Network, you can run the model in real time.
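As a rough illustration of what "weights, biases, and model definition" amount to for a fully connected network, the sketch below runs one input vector through two dense layers in plain Python. It does not use the Moku Python API and does not reflect the .linn file format. The layer values, activation choices, and the helper name `dense_forward` are hypothetical.

```python
def relu(v):
    return max(0.0, v)

def linear(v):
    return v

def dense_forward(x, layers):
    """Run x through a list of (weights, biases, activation) dense layers."""
    for weights, biases, activation in layers:
        # Each output neuron: activation(dot(weight_row, x) + bias)
        x = [
            activation(sum(w * xi for w, xi in zip(row, x)) + b)
            for row, b in zip(weights, biases)
        ]
    return x

# Hypothetical 2-input network: a 2-neuron ReLU layer, then 1 linear output.
layers = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0], relu),
    ([[1.0, 2.0]], [0.1], linear),
]

out = dense_forward([0.2, 0.6], layers)
print(out)  # approximately [0.9]
```

Training adjusts the numbers inside `layers`; inference on the instrument corresponds to evaluating a fixed set of them on each new input.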

If you input two sine waves, can the Neural Network act as a lowpass filter?

Yes, this is one of the possible applications for the Moku Neural Network. You can implement simple filters with the Moku Digital Filter Box, or you can train a network model to act as a lowpass filter by modifying the autoencoder example or creating your own.
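One way to approach this is to train on pairs where the input is the sum of a low-frequency and a high-frequency sine wave and the target is the low-frequency component alone. The sketch below only builds such training pairs in plain Python. The frame length, frequencies, amplitude, and the function name `make_lowpass_pairs` are assumptions, not values from the autoencoder example.

```python
import math

def make_lowpass_pairs(n_frames, frame_len=100, f_low=2.0, f_high=25.0):
    """Return (input_frame, target_frame) pairs for a lowpass-style denoiser.

    input  = low-frequency sine + smaller high-frequency sine
    target = low-frequency sine only
    """
    pairs = []
    t = [i / frame_len for i in range(frame_len)]  # normalized time axis
    for k in range(n_frames):
        phase = 2 * math.pi * k / n_frames  # vary phase so frames differ
        target = [math.sin(2 * math.pi * f_low * ti + phase) for ti in t]
        mixed = [
            s + 0.5 * math.sin(2 * math.pi * f_high * ti)
            for s, ti in zip(target, t)
        ]
        pairs.append((mixed, target))
    return pairs

pairs = make_lowpass_pairs(8)
print(len(pairs), len(pairs[0][0]))  # 8 100
```

A network trained on pairs like these learns to suppress the high-frequency component, which is the behavior of a lowpass filter for signals resembling the training data.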

How complex can the model be (e.g., transformer)?

A transformer is the type of model used for large language models (LLMs) like ChatGPT. It tracks relationships in sequential data and can learn context, such as how words relate within a sentence. These models are very complex and require significant training time and data. The Moku Neural Network currently supports fully connected networks and does not support transformer models, since its purpose is signal processing. The FPGA-based architecture allows users to perform real-time signal processing and inference on discrete values and signals.

What is the limit on the size of a model in MB? Can we upload a U-net model?

The limit on the size of a model that you can upload to the Moku Neural Network is more likely to come from the network dimension limits than from file size. The instrument supports up to five densely connected layers with up to 100 neurons in each layer, amounting to just over 50,000 coefficients.
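The coefficient figure can be checked with a short calculation. The sketch below counts weights plus biases for a stack of dense layers and tests a layout against the five-layer, 100-neuron limits stated above. It assumes an input width of 100 and that "coefficients" means weights and biases together; the function names are hypothetical.

```python
MAX_LAYERS = 5     # from the limits stated in this answer
MAX_NEURONS = 100  # from the limits stated in this answer

def count_coefficients(n_inputs, layer_sizes):
    """Total weights + biases for fully connected layers of the given sizes."""
    total = 0
    prev = n_inputs
    for n in layer_sizes:
        total += prev * n + n  # weight matrix plus one bias per neuron
        prev = n
    return total

def fits_limits(layer_sizes):
    """Check a layer layout against the stated dimension limits."""
    return (len(layer_sizes) <= MAX_LAYERS
            and all(1 <= n <= MAX_NEURONS for n in layer_sizes))

largest = [100] * 5
print(fits_limits(largest))              # True
print(count_coefficients(100, largest))  # 50500
```

Under these assumptions the largest network has 5 × (100 × 100 + 100) = 50,500 coefficients, consistent with "just over 50,000".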

A typical U-net model requires both convolutional layers and skip connections, which we do not currently support. If you have specific requirements, let us know. We’ll be sure to capture all feature requests to consider for future software development.

Thank you for viewing our webinar. We look forward to seeing you again.

For more insightful demonstrations, check out our webinar library for on-demand viewing.

More questions?

Get answers to FAQs in our Knowledge Base

If you have a question about a device feature or instrument function, check out our extensive Knowledge Base to find the answers you’re looking for. You can also quickly see popular articles and refine your search by product or topic.

Join our User Forum to stay connected

Want to request a new feature? Have a support tip to share? From use case examples to new feature announcements and more, the User Forum is your one-stop shop for product updates, as well as connection to Liquid Instruments and our global user community.