Introduction

Wuhan University of Technology (WUT) is a Double First Class University and is regularly ranked among the top 10 engineering institutes in China. WUT specializes in teaching modern and emerging engineering disciplines, such as artificial intelligence and new energy vehicles.

Moku:Go is the first software-defined instrumentation solution from Liquid Instruments tailored for engineering education and general industry. With Moku’s Multi-Instrument Mode (MiM), users can combine pairs of instruments to run simultaneously with lossless interconnection.

Moku:Go supports Moku Cloud Compile (MCC), a hosted service for the creation and deployment of digital signal processing algorithms. MCC users can design algorithms using their favorite tools such as MATLAB and Simulink, then easily deploy them to a Moku:Go device, where they run in real time alongside other software-defined instrumentation.

The challenge

WUT students were affected by the COVID-19 pandemic earlier and more seriously than many. When they were allowed to meet in person for classes, the mandatory masks and social distancing requirements made it difficult for the students to hear each other, hear the instructor, and engage with the class.

In response to this challenge, a group of students decided to design a smart conference room system with automatic tracking capabilities and real-time voice processing. The students identified four different features that they wanted from their conference room system:

- Neural network speaker identification

- Voice equalization (timbre enhancement)

- Adaptive noise cancellation

- Mask detection

The solution

The students selected Moku:Go as the best device to help build their system. This selection was based on its software-defined approach to instrumentation, which enables configuration for many different applications, along with powerful customization options like Moku Cloud Compile. They used Moku:Go for the core problems of speaker identification and adaptive noise cancellation. While voice equalization is achievable with the Moku:Go Digital Filter Box, for this project the students used a simple FPGA development board that they already had. For mask detection, the students used a commercially available face detection system.

Speaker identification is a challenging task but is required to amplify the speaker’s voice while suppressing background noise. The students accomplished this by interfacing Kendryte’s powerful K210 edge computing accelerator to their Moku:Go. The K210 is a cost-effective system-on-chip, designed specifically for accelerating machine learning tasks in embedded systems. The students programmed the K210 to run a convolutional neural network, taking input signals from six microphones in an array and generating an analog voltage representing the direction of the dominant sound.

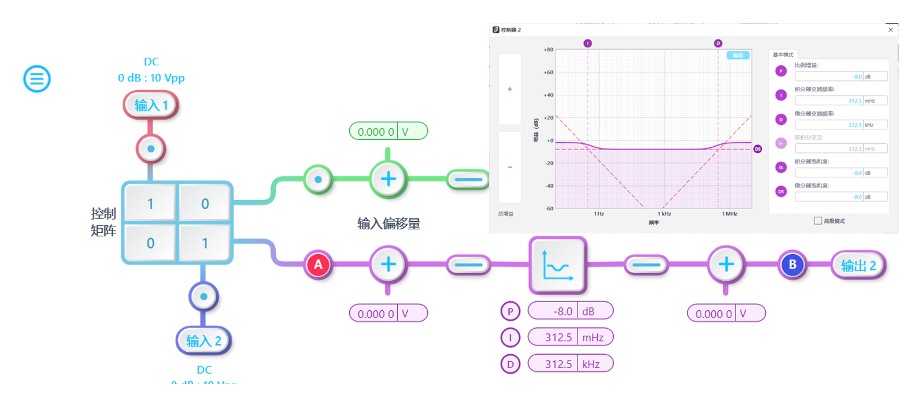

Students connected the K210’s dominant speaker direction signal to the Moku:Go PID Controller (Figure 1), along with a signal representing the actual direction in which the system’s high-fidelity microphone was pointed. The PID Controller instrument took the difference of these values to find the directional error and drove a servo motor to smoothly reposition the primary microphone to point to the speaker.

Figure 1: PID Controller configured for microphone positioning

The proportional gain dominated the tuning of the PID Controller, while the integrator and differentiator saturation features provided a small, bounded increase in gain at low and high frequencies, respectively. At low frequency, the integrator compensates for systematic error and bias in the direction-finding algorithm. The small differential gain provides damping, smoothing the microphone movement.
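The tuning described above can be illustrated with a minimal Python sketch. It is not the Moku:Go PID Controller implementation: the gains, the gain limits, the sample period, and the rate-controlled servo model are all assumptions chosen for illustration. The integrator is modeled as a leaky integrator (bounded gain at low frequency) and the differentiator as a low-pass-filtered derivative (bounded gain at high frequency).

```python
from dataclasses import dataclass


@dataclass
class SaturatingPID:
    """PID whose I and D paths have bounded gain (all values are illustrative)."""
    kp: float = 4.0            # proportional gain, dominates the response
    ki: float = 0.5            # integrator gain
    kd: float = 0.05           # differentiator gain
    i_gain_limit: float = 2.0  # assumed cap on integrator gain at low frequency
    d_gain_limit: float = 2.0  # assumed cap on differentiator gain at high frequency
    dt: float = 0.01           # assumed sample period in seconds
    i_state: float = 0.0
    d_state: float = 0.0
    prev_error: float = 0.0

    def update(self, target: float, actual: float) -> float:
        # Directional error: where the speaker is minus where the mic points.
        error = target - actual
        # Leaky integrator: DC gain settles at ki / leak = i_gain_limit.
        leak = self.ki / self.i_gain_limit
        self.i_state += self.dt * (self.ki * error - leak * self.i_state)
        # Filtered derivative: gain levels off at d_gain_limit above the corner.
        corner = self.d_gain_limit / self.kd
        raw = self.kd * (error - self.prev_error) / self.dt
        alpha = self.dt * corner / (1.0 + self.dt * corner)
        self.d_state += alpha * (raw - self.d_state)
        self.prev_error = error
        return self.kp * error + self.i_state + self.d_state


def simulate_tracking(target_deg: float, seconds: float) -> list:
    """Drive a hypothetical rate-controlled servo toward target_deg."""
    pid = SaturatingPID()
    angle = 0.0
    angles = []
    for _ in range(int(round(seconds / pid.dt))):
        rate = pid.update(target_deg, angle)  # controller output as angular rate
        angle += rate * pid.dt                # servo modeled as a pure integrator
        angles.append(angle)
    return angles


if __name__ == "__main__":
    trace = simulate_tracking(target_deg=30.0, seconds=20.0)
    print(f"final microphone angle: {trace[-1]:.3f} degrees")
```

In this toy model the proportional term sets the speed of the response, the bounded integrator trims slow bias, and the filtered derivative limits how sharply the command can change when the target direction jumps.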

The final stage of the students’ system was adaptive noise cancellation. This powerful technique takes two inputs: a noisy signal and a stream of environmental noise. It intelligently and automatically tunes a filter to best remove the environmental noise from the original signal. In this case, the students took the signal from the primary microphone pointed toward the speaker and sampled the environmental noise from the microphone array.

One algorithm used for noise cancellation is least mean squares (LMS) adaptive filtering. However, writing an LMS adaptive filter for a field-programmable gate array (FPGA) is a complex task, outside the scope of most university-level signal processing curricula. MCC bridged the gap, allowing the students to build and test the LMS algorithm from standard building blocks using MathWorks Simulink and run it on their Moku:Go device.
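To make the idea concrete, here is a minimal NumPy sketch of LMS adaptive noise cancellation. It is not the students' Simulink design or the VHDL that ran on Moku:Go. The tap count, step size `mu`, the sine wave standing in for a voice, and the three-tap `room_path` are all hypothetical values chosen for illustration.

```python
import numpy as np


def lms_noise_cancel(primary, reference, num_taps=16, mu=0.005):
    """Subtract an adaptively filtered copy of `reference` from `primary`."""
    primary = np.asarray(primary, dtype=float)
    reference = np.asarray(reference, dtype=float)
    weights = np.zeros(num_taps)  # adaptive FIR coefficients
    taps = np.zeros(num_taps)     # most recent reference samples, newest first
    cleaned = np.zeros(len(primary))
    for n in range(len(primary)):
        taps = np.roll(taps, 1)
        taps[0] = reference[n]
        noise_estimate = weights @ taps
        # The error is the primary signal with the estimated noise removed,
        # so it doubles as the cleaned output.
        error = primary[n] - noise_estimate
        # LMS update: step the weights along the instantaneous gradient.
        weights += mu * error * taps
        cleaned[n] = error
    return cleaned, weights


def make_demo_signals(num_samples=5000, seed=0):
    """Synthetic stand-ins: a sine 'voice' plus noise coloured by a made-up path."""
    rng = np.random.default_rng(seed)
    t = np.arange(num_samples)
    voice = np.sin(2 * np.pi * 0.01 * t)
    noise = rng.standard_normal(num_samples)  # what the reference mics hear
    room_path = np.array([0.8, -0.3, 0.2])    # hypothetical acoustic path
    primary = voice + np.convolve(noise, room_path)[:num_samples]
    return voice, noise, primary


if __name__ == "__main__":
    voice, noise, primary = make_demo_signals()
    cleaned, weights = lms_noise_cancel(primary, noise)
    before = np.mean((primary[-1000:] - voice[-1000:]) ** 2)
    after = np.mean((cleaned[-1000:] - voice[-1000:]) ** 2)
    print(f"residual noise power before: {before:.3f}, after: {after:.3f}")
```

The step size trades convergence speed against steady-state accuracy: a larger `mu` adapts faster but leaves more residual noise, and too large a value makes the filter unstable.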

The result

The adaptive conference room system was a true cross-disciplinary team effort. Students familiar with neural networks, computer engineering, and control systems built and verified the speaker identification system, trained the neural network, and tuned the PID controller.

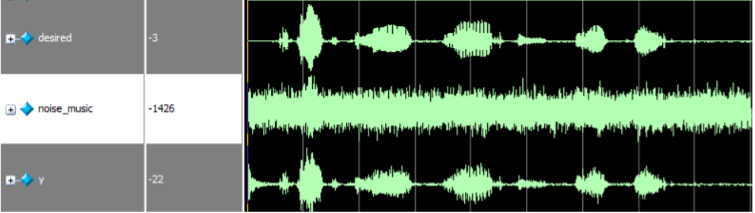

Adaptive noise cancellation proved the biggest challenge. Students used the Moku:Go Data Logger to capture signals in real situations so they could test, iterate, and refine their design in the MATLAB/Simulink ecosystem. Once they validated the system, they exported it to VHDL using HDL Coder and ran final system checks. One such simulation run is shown in Figure 2, where an ideal voice signal shown on the top row was artificially swamped with background noise (second row) before being fed to the LMS adaptive filter. The filter quickly tuned itself to the noise profile and recovered the voice (final row, Figure 2).

Figure 2: Simulated performance of the VHDL adaptive noise cancellation system on real-world data

Once the design was validated offline with real data, the students imported their VHDL to MCC, built the design, and ran it on their Moku:Go device.

Conclusion

Moku:Go formed the core of a full adaptive conference room system that furthered students’ understanding of real-time digital signal processing (DSP) and machine learning techniques while solving a real and pressing problem. The flexibility of Moku:Go meant a single unit could be repurposed to develop control systems, filters, and complex DSP. The integration of HDL Coder and MCC proved effective for the rapid testing and deployment of complex signal processing.

To learn more about Moku:Go and our courseware offerings, reach out directly to our education team at edu@liquidinstruments.com.