Machine learning for test and measurement

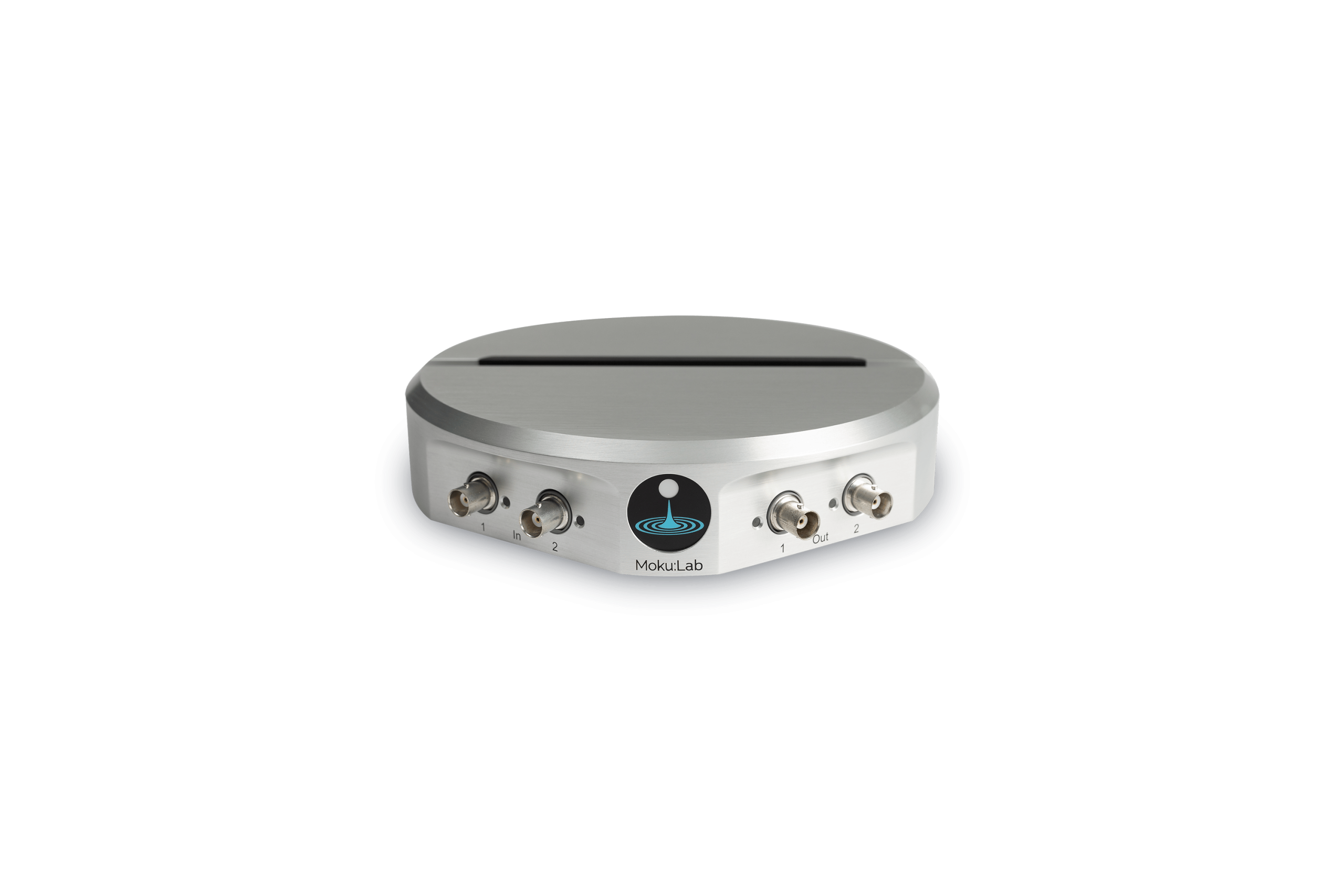

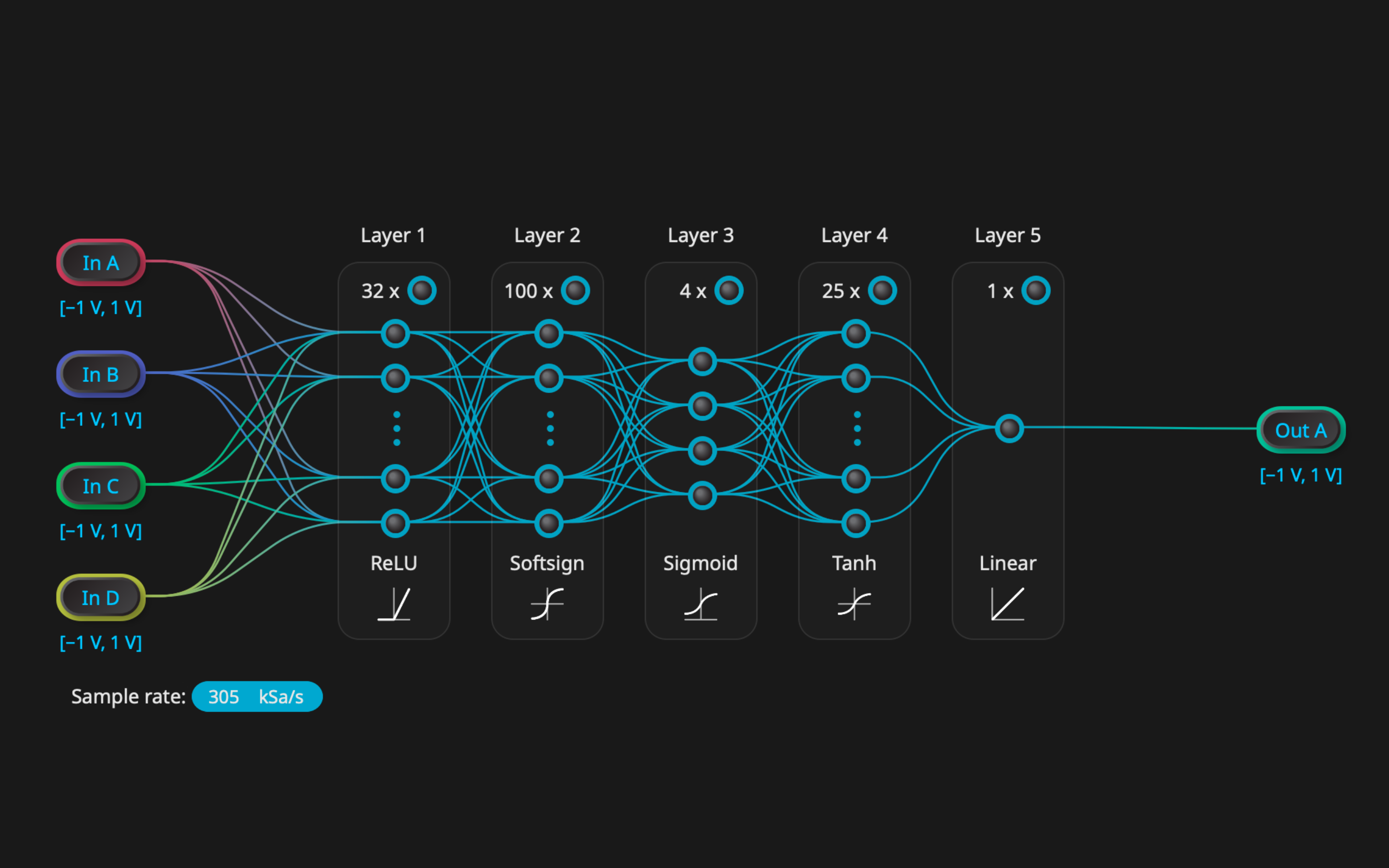

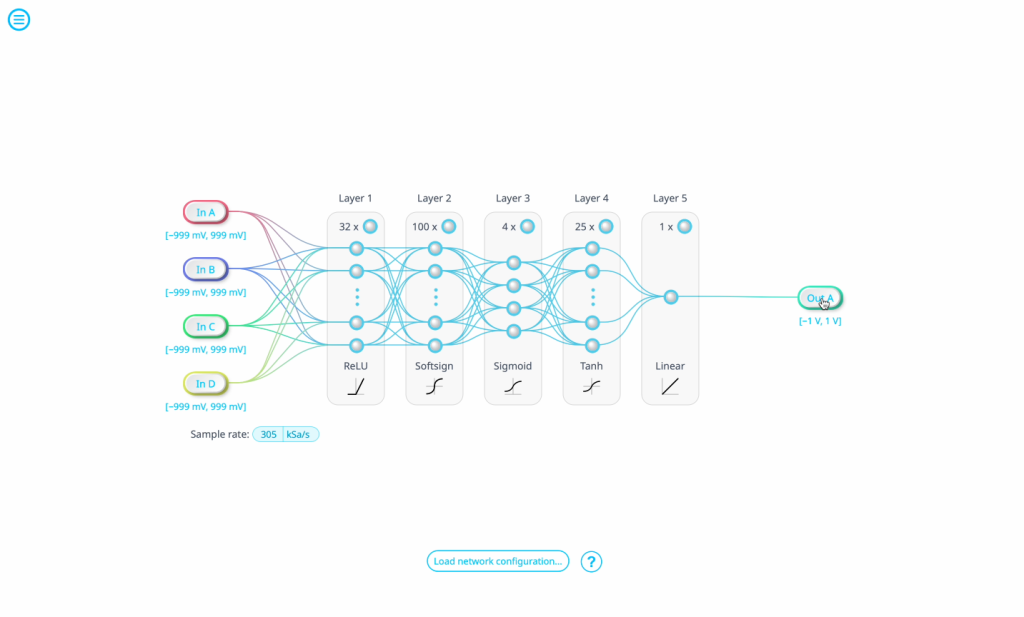

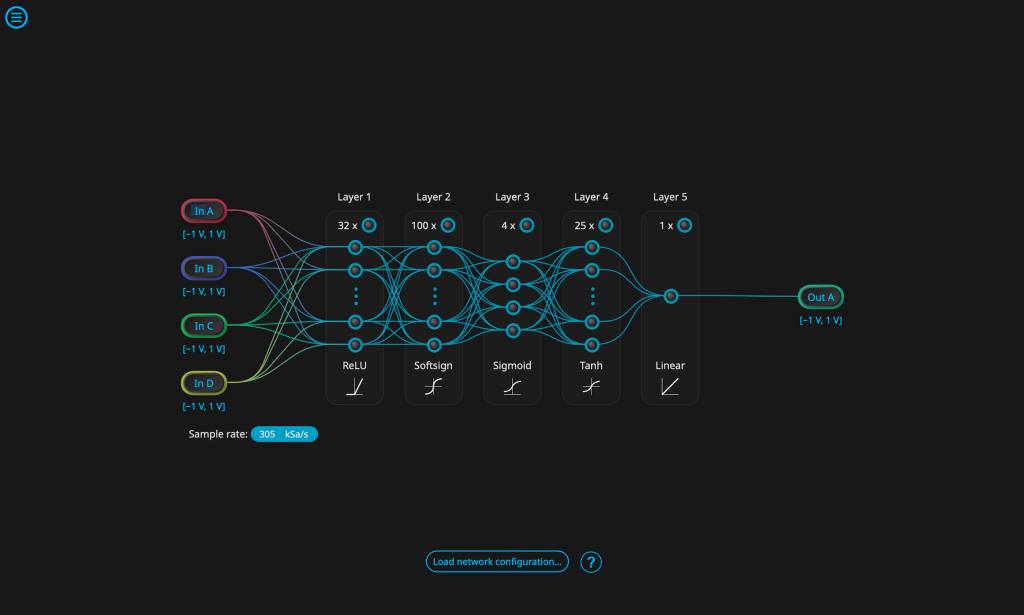

Neural networks deployed on FPGAs enable more efficient processing, real-time decision-making, and informed control system feedback. The Moku Neural Network implements deterministic data analysis algorithms for applications where timing is critical, such as quantum control, and can be reconfigured on the fly. Build and train models using Python, then deploy to your test systems using Moku to achieve low-latency inference and react quickly to changing experimental conditions.
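As a rough illustration of the "build and train in Python" step, the sketch below trains a very small dense network using only the Python standard library. It is not the Moku API: the function names (`init_layer`, `forward`, `train`, `build_and_train`), the toy target (fitting y = x² on a fixed grid), the layer sizes, and the learning rate are all assumptions chosen for illustration. Deployment to hardware is omitted because it depends on the Moku tooling.

```python
import math
import random


def init_layer(n_in, n_out, rng):
    """Return (weights, biases) with small random weights and zero biases."""
    scale = 1.0 / math.sqrt(n_in)
    weights = [[rng.uniform(-scale, scale) for _ in range(n_in)]
               for _ in range(n_out)]
    return weights, [0.0] * n_out


def forward(x, params):
    """One tanh hidden layer followed by a single linear output."""
    (w1, b1), (w2, b2) = params
    hidden = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
              for row, b in zip(w1, b1)]
    out = sum(w * h for w, h in zip(w2[0], hidden)) + b2[0]
    return hidden, out


def mse(data, params):
    """Mean squared error of the network over a list of (x, y) pairs."""
    return sum((forward(x, params)[1] - y) ** 2 for x, y in data) / len(data)


def train(data, params, lr=0.05, epochs=200):
    """Plain per-sample gradient descent; updates params in place."""
    (w1, b1), (w2, b2) = params
    for _ in range(epochs):
        for x, y in data:
            hidden, out = forward(x, params)
            err = out - y  # gradient of 0.5 * err**2 w.r.t. the output
            for j, h in enumerate(hidden):
                # Backpropagate through tanh before touching w2[0][j].
                grad_h = err * w2[0][j] * (1.0 - h * h)
                w2[0][j] -= lr * err * h
                for i, xi in enumerate(x):
                    w1[j][i] -= lr * grad_h * xi
                b1[j] -= lr * grad_h
            b2[0] -= lr * err
    return params


def build_and_train(seed=0, hidden_units=4):
    """Build a 1-input, 1-output network and fit y = x**2 (toy data)."""
    rng = random.Random(seed)  # fixed seed keeps the run reproducible
    data = [([k / 10.0], (k / 10.0) ** 2) for k in range(-10, 11)]
    params = [init_layer(1, hidden_units, rng),
              init_layer(hidden_units, 1, rng)]
    initial = mse(data, params)
    train(data, params)
    return initial, mse(data, params), params


initial_loss, final_loss, trained_params = build_and_train()
print(f"loss before training: {initial_loss:.4f}")
print(f"loss after training:  {final_loss:.4f}")
```

Because the layer sizes are fixed, every call to `forward` performs the same number of multiply-accumulate operations regardless of the input. That fixed amount of work per inference is the property that makes a network of this shape a natural fit for deterministic, low-latency hardware.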